THIS WEEK'S ANALYSIS

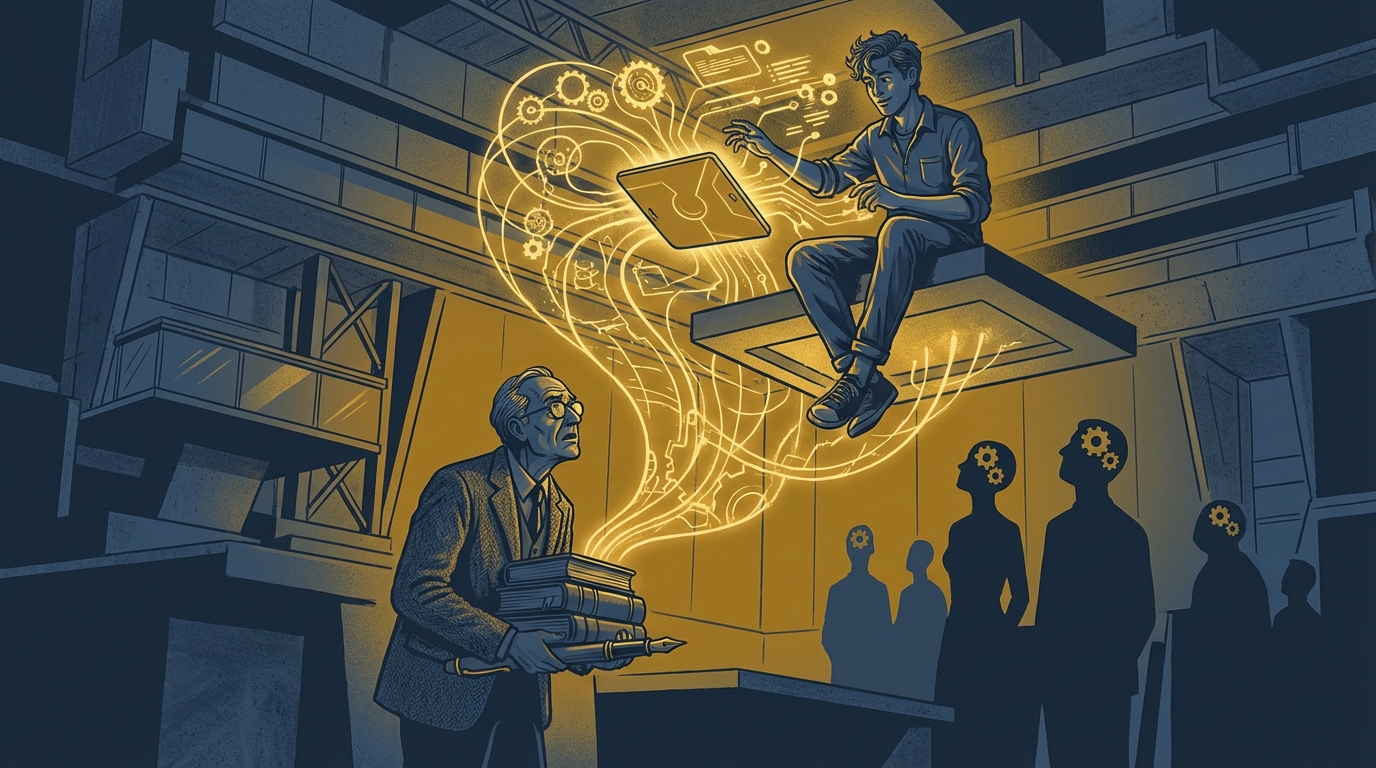

Students Secretly Surpass Professors While Institutions Draft AI Policies

A profound reversal emerges across higher education as students develop sophisticated AI fluencies while their instructors struggle with basic implementations. This expertise inversion coincides with an explosion of institutional governance frameworks that remain disconnected from classroom realities. The literature reveals a fundamental tension: empirical evidence demonstrates AI tutoring's superior learning outcomes, yet critical scholars warn these same tools may be eroding essential cognitive capacities. As institutions rush to regulate what they barely understand, the traditional knowledge hierarchy collapses—leaving us to question who truly holds authority in an academy where students apologize for knowing too much.


PERSPECTIVES

Through McLuhan's Lens

When students offer to build custom AI tools while professors fumble with screen-sharing, we're witnessing more than a tech skills gap. McLuhan would recognize this moment: the dominant communication ...


Through Toffler's Lens

When students master AI tools while their professors struggle with ChatGPT basics, who really holds the expertise? A startling analysis of 1,550 academic articles reveals higher education's hidden cri...


Through Asimov's Lens

When students apologize for knowing more than their professors, something fundamental has shifted. Professor Chen's seventeen years of expertise crumbles as her students reveal they've been secretly "...

THIS WEEK'S PODCASTS

HIGHER EDUCATION

Teaching & Learning Discussion

This week: Educational institutions flood classrooms with AI governance frameworks while teachers struggle without practical implementation guidance. UNESCO and government policies like France's AI education framework emphasize top-down control over classroom innovation. Meanwhile, evidence shows AI tutoring outperforms traditional methods, yet a policy-practice disconnect leaves educators navigating between institutional restrictions and pedagogical potential without clear pathways forward.

SOCIAL ASPECTS

Equity & Access Discussion

This week: Social systems are fragmenting without visible cause, as communities report unprecedented disconnection despite increased digital connectivity. The absence of identifiable patterns in social breakdown suggests either measurement failure or emergence of novel disruption mechanisms beyond current analytical frameworks. Traditional social cohesion metrics show normal ranges while lived experiences indicate profound isolation, revealing a gap between institutional understanding and social reality.

AI LITERACY

Knowledge & Skills Discussion

This week: How can educators teach critical AI evaluation when 85% have adopted tools but 60% find institutional policies unclear or ineffective? The literature reveals a fundamental disconnect: frameworks emphasize progressing from functional skills to ethical application, yet implementation focuses on detection over integration. This gap between literacy aspirations and classroom realities leaves students skilled in tool use but unprepared for the critical thinking AI demands.

AI TOOLS

Implementation Discussion

This week: French education ministries mandate AI literacy frameworks while teachers resist what they call "epistemic enclosure"—the narrowing of thought patterns to AI-shaped outputs. The Senate report champions inevitable integration, yet university professors warn that rushing implementation without examining pedagogical integrity risks transforming education into efficiency metrics. This implementation imperative assumes benefits while silencing critical questions about whose knowledge matters.

Weekly Intelligence Briefing

Tailored intelligence briefings for different stakeholders in AI education

Leadership Brief

FOR LEADERSHIP

Educational institutions face a critical juncture as AI adoption outpaces pedagogical frameworks, with teachers reporting that students' reasoning abilities are deteriorating despite—or because of—AI tool proliferation. The gap between technical access and meaningful literacy demands immediate resource allocation decisions: invest in comprehensive AI literacy programs that emphasize critical thinking, or risk producing graduates who depend on AI without understanding its limitations.

Faculty Brief

FOR FACULTY

While administrative policies emphasize AI detection and restriction, emerging evidence reveals students increasingly struggle with fundamental reasoning skills rather than mere tool misuse. The disconnect between policy focus on plagiarism and actual classroom needs—where students require guided integration rather than prohibition—demands pedagogical frameworks that develop critical evaluation capabilities alongside tool literacy, challenging assumptions about what constitutes effective AI education.

Research Brief

FOR RESEARCHERS

Empirical studies documenting AI's impact on student reasoning lack robust methodological frameworks for measuring cognitive changes beyond surface-level task completion. While teachers report declining critical thinking abilities, research instruments fail to distinguish between tool dependency and genuine skill erosion. The field requires longitudinal assessment protocols that capture both immediate performance metrics and long-term cognitive development trajectories across diverse educational contexts.

Student Brief

FOR STUDENTS

Students need critical reasoning skills alongside AI tool proficiency, yet teachers warn AI is fueling a crisis in kids' ability to think independently. While universities emphasize technical competence, graduates entering high-stakes fields lack frameworks for ethical decision-making when deploying AI systems. Career readiness demands both operational skills and the capacity to evaluate when AI use undermines learning objectives or professional judgment.

COMPREHENSIVE DOMAIN REPORTS

Comprehensive domain reports synthesizing research and practical insights

HIGHER EDUCATION

Teaching & Learning Report

Educational institutions exhibit a governance-practice disconnect: comprehensive policy frameworks like UNESCO's generative AI guidance and France's AI education framework proliferate while classroom implementation remains fragmented and unsupported. This top-down solutionism assumes that regulatory compliance will produce technological integration, yet Nature's RCT study showing AI tutoring efficacy coexists with critical analyses documenting unintended pedagogical consequences. The resulting implementation vacuum forces educators to navigate between institutional mandates and pedagogical realities without adequate support structures, exposing how current governance models prioritize regulatory compliance over educational transformation.

SOCIAL ASPECTS

Equity & Access Report

Analysis of Social Aspects discourse reveals fragmented approaches to AI integration where technological capabilities are prioritized over social implications, creating systematic blindness to equity concerns. This pattern manifests across educational institutions through separate technical and social committees, resulting in implementation gaps where algorithmic systems are deployed without adequate consideration of their differential impacts on marginalized communities. The disconnect between technical development and social assessment correlates with amplified educational inequities, as documented through institutional case studies showing how AI tools designed for efficiency often disadvantage students lacking digital resources or cultural capital. The report synthesizes evidence from policy documents and implementation reviews to demonstrate how structural silos between technical and social expertise perpetuate harmful outcomes despite stated commitments to inclusive education.

AI LITERACY

Knowledge & Skills Report

Analysis reveals AI literacy frameworks converging on a multi-dimensional progression from functional skills through critical evaluation to ethical application, yet implementation data exposes a critical capacity gap: while 85% adoption rates suggest widespread integration, 60% of educators find institutional policies unclear or ineffective. This disconnect between theoretical frameworks and institutional readiness manifests across educational levels, with literacy models emphasizing understanding, evaluating, and using AI (AI Literacy: A Framework to Understand, Evaluate, and Use Emerging ...) while institutions struggle to translate these into coherent practice (AI in High School Education Report - bowdoin.edu). The report synthesizes evidence demonstrating how this implementation gap reflects deeper tensions between risk-regulation and opportunity-transformation narratives shaping AI education policy.

AI TOOLS

Implementation Report

Analysis reveals an implementation imperative dominating AI education discourse: institutional frameworks treat AI integration as inevitable and beneficial, orienting their purpose toward promotion over critique and framing central questions around 'how to implement' rather than 'whether to implement' (IA et éducation - Sénat). This imperative manifests through literacy-focused approaches that marginalize resistance narratives (Framework for The Use of Ai in Education), creating a reality gap between optimistic implementation frameworks and documented performance failures (Major Concerns of Generative AI in Education: A Critique). The report synthesizes policy documents, institutional frameworks, and critical analyses to expose how technological determinism shapes educational AI governance, potentially foreclosing pedagogical alternatives and democratic deliberation about fundamental educational values.

TOP SCORING ARTICLES BY CATEGORY

METHODOLOGY & TRANSPARENCY

Behind the Algorithm

This report employs a comprehensive evaluation framework combining automated analysis and critical thinking rubrics.

This Week's Criteria

Articles evaluated on fit, rigor, depth, and originality

Why Articles Failed

Primary rejection factors: insufficient depth, lack of evidence, promotional content